The time has finally come for me to ditch Disqus for comments. I’ll let this GIF do the talking:

The downside of a free service

As the adage goes: if you are not paying for the product, you are the product. And the ad companies are the customers, vying for the ability to track you and your interests as you move around the web.

Yet for a free product that’s really easy to set up, Disqus was not a bad choice back when I first migrated to a static site. It was an easy way to get up and running, and it requires minimal configuration. It’s a huge timesaver for folks who are focused on migrating and hosting their content, when comments are almost an afterthought.

Back in the day when I first installed Disqus, I really don’t think it was as abusive with its HTTP requests. I think I would have noticed it making almost 100 additional HTTP requests, but then again I may have just overlooked it, or only tested in a browser with ad blocking enabled. I think Disqus, like all things tend to do, has likely just grown larger and more out of control with time.

It’s a bit shocking to snoop around and see all the HTTP requests being made today: a ton of heavy payloads for content from Disqus servers, in addition to ad servers, Facebook (!?), and YouTube. After watching the network panel flood with requests (as you can see in the GIF above), I decided it was time to ditch Disqus and look for alternatives.

Migrating from Disqus to Commento

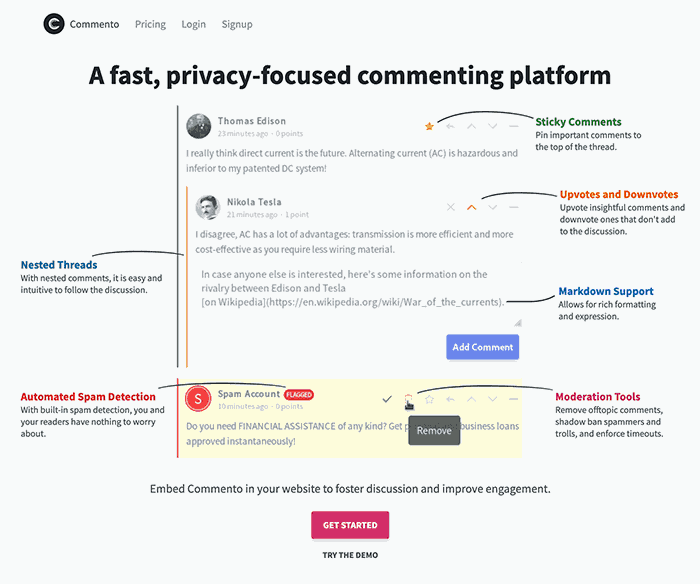

There are a few options out there, but Commento stood out. It is by no means perfect, but it’s decent enough, and the setup is quite easy for folks willing to pay the $5/month fee. For folks who are cheap and/or not currently able to afford that monthly fee (that’s yours truly on both counts), Commento is particularly attractive because it is an open-source project that has a free self-hosting option. That’s the path I started to go down.

The disadvantages of using Commento (in general)

Fair warning: Commento has a few rough edges. It’s in active development, though, so I expect some of the following criticisms to become invalid with time. Do your own research!

Here’s a list of some disadvantages:

- Testing locally requires modifying the clientside code in commento.js, as Remy Sharp found.

- Disqus will not export user avatars, links to user websites, Disqus profile URLs, or post votes. If you are self-hosting and have access to your local Commento’s database, there is a workaround which I’ve explained below with some helper code.

- If your Disqus export doesn’t contain trailing slashes and your website does, there will be a mismatch and the page won’t load any comments, and it won’t be obvious what’s going wrong. I solved this by tweaking commento.js to remove trailing slashes.

- The comment moderation panel lives on each individual page on your own website. There’s no way to see an overview of all comments (the Commento settings page in general is a bit sparse).

- There’s a weird issue with spaces getting eaten up when trying to edit comments.

- Relative time formatting is a little funky. Commento displays “24 months ago” instead of a more natural “2 years ago”. This is intended, but it would be nice to make this configurable. As of now, this is another thing you can simply hack around in commento.js.
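Two of the workarounds above (trailing slashes and relative time) come down to small string helpers. The following is only a minimal sketch of what such tweaks might look like. The function names `stripTrailingSlash` and `formatMonthsAgo` are hypothetical and are not taken from commento.js, so where to call them in the real file is left open.

```javascript
// Hypothetical helper (not part of commento.js): normalize a page path so
// that "/post/" and "/post" are treated as the same page.
// An empty result is mapped back to the root path "/".
function stripTrailingSlash(path) {
  const trimmed = path.replace(/\/+$/, "");
  return trimmed === "" ? "/" : trimmed;
}

// Hypothetical helper (not part of commento.js): format an age given in
// whole months, switching to years once 12 months have passed.
function formatMonthsAgo(months) {
  if (months < 12) {
    return months === 1 ? "1 month ago" : months + " months ago";
  }
  const years = Math.floor(months / 12);
  return years === 1 ? "1 year ago" : years + " years ago";
}

console.log(stripTrailingSlash("/2020/ditching-disqus/"));
console.log(formatMonthsAgo(24));
```

Whichever side you normalize (the export or the page path), the important part is that both sides end up in the same form.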

Disadvantages when self-hosting Commento

This deserves its own section, as many folks will not be going down the self-hosting road.

- No built-in support for SSL. This is a big downside, as it requires extra time fiddling around setting up a proxy. This setup guide details that process using Docker containers.

- Extra overhead of setting up and maintaining a server, database, HTTPS proxy, SSL cert generation/renewal, mail server (for email confirmations), and OAuth keys if you want to support users logging in through Twitter, Google, or GitLab.

Migrating Disqus avatars, links, and votes

When you export your comments from Disqus, you receive an email with a link to a zipped-up XML file of comments. Commento uses this file to import all your old comments. Unfortunately, the export is missing some information that would be a shame to lose.

For instance, my blog doesn’t get a lot of comments in general, but I have a few popular entries such as my aging post about UMD and older JavaScript modules (which now needs to be updated with ES Modules!), which has a few avatars, links to user sites, and post votes. The top post has over 70 upvotes, so it would be a pity to lose that information.

Luckily there is a way to get this information, albeit somewhat hacky (but hey, it works):

- Log in to Disqus, then click on the site you want to export.

- Open the Network tab in your web dev tools window.

- Click on Moderate comments in the navigation on the left.

- Click on the Approved tab on top.

- Search for list in your Network tab and copy the URL. It will look something like this: https://disqus.com/api/3.0/posts/list?attach=postModHtml&attach=postAuthorRep&limit=25&include=approved&order=desc&related=thread&related=forum&forum=[FORUM_ID_REMOVED]&start=2007-01-01T05%3A00%3A00.000Z&end=2020-01-11T04%3A59%3A59.999Z&api_key=[KEY_REMOVED]&_=1578681496399

- In your copied URL, change limit=25 to limit=100 (100 is the maximum supported by the API). This is your new “base URL”.

- Request the base URL in your browser or a script.

- To request the next page of comments, inspect the current JSON and copy the contents of cursor.next. Append this to the base URL with &cursor=[STRING_YOU_COPIED_FROM_ABOVE] to get the next page. Continue this process for each page until cursor.next is null. Now you’ve fetched all the comment pages!
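The paging steps above can be sketched as a small loop. This is a minimal sketch under a few assumptions, not a definitive client: it uses the global `fetch` available in Node.js 18+ rather than node-fetch, it assumes each page keeps its comments in a `response` array, and it treats a `cursor.hasNext` flag of `false` as an extra stop condition in case `cursor.next` is never null. The names `nextPageUrl` and `fetchAllComments` are made up for illustration.

```javascript
// Build the URL for the next page, or return null when there is nothing
// left to fetch. `page` is the parsed JSON of the current page.
function nextPageUrl(baseUrl, page) {
  const cursor = (page && page.cursor) || {};
  // Assumption: some responses may also carry a boolean `hasNext` flag.
  if (cursor.hasNext === false || !cursor.next) {
    return null;
  }
  return baseUrl + "&cursor=" + encodeURIComponent(cursor.next);
}

// Fetch every page in turn and collect the comments.
// Assumptions: Node.js 18+ (global fetch) and a `response` array per page.
async function fetchAllComments(baseUrl) {
  const comments = [];
  let url = baseUrl;
  while (url) {
    const res = await fetch(url);
    if (!res.ok) {
      throw new Error("Request failed with status " + res.status);
    }
    const page = await res.json();
    comments.push(...(page.response || []));
    url = nextPageUrl(baseUrl, page);
  }
  return comments;
}
```

Calling `fetchAllComments(baseUrl)` with the base URL from the steps above should resolve to one flat array of comment objects, assuming the response shape matches.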

Sample Node.js Disqus fetch script

Below is the Node.js script I made to fetch each page of Disqus comments (what I described above) and then convert the relevant bits of info into SQL statements. This worked for me, but it’s not perfect and it’s a bit hacky. Feel free to tweak it!

I may eventually move this to GitHub, but it’s here for now. To initialize:

- mkdir disqus-migrate && cd disqus-migrate

- npm init (just accept all the default suggestions)

- npm i --save node-fetch

- touch index.js

- Paste the following code into index.js (make sure to update the URL passed into init() with the “Base URL” from above):

| |
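As a rough illustration of the conversion step only (this is not the full script), something along these lines turns one fetched comment into SQL. Everything specific here is an assumption to verify before running anything: the Disqus field names (`author.name`, `author.url`, `author.avatar.permalink`, `points`, `createdAt`), the Commento table and column names (`commenters.link`, `commenters.photo`, `comments.score`, `comments.creationDate`), and the idea of matching rows by commenter name and creation date. The helpers `sqlString` and `postToSql` are hypothetical names.

```javascript
// Escape a value as a SQL string literal by doubling single quotes.
function sqlString(value) {
  return "'" + String(value).replace(/'/g, "''") + "'";
}

// Turn one Disqus comment object into SQL UPDATE statements.
// ASSUMPTION: field, table, and column names below are guesses; check them
// against a real API response and your own database schema.
function postToSql(post) {
  const author = post.author || {};
  const avatar = author.avatar ? author.avatar.permalink : "";
  const name = sqlString(author.name || "");
  const score = parseInt(post.points, 10) || 0;
  return [
    // Restore the commenter's website link and avatar URL.
    "UPDATE commenters SET link = " + sqlString(author.url || "") +
      ", photo = " + sqlString(avatar || "") +
      " WHERE name = " + name + ";",
    // Restore the comment's vote count.
    "UPDATE comments SET score = " + score +
      " WHERE creationDate = " + sqlString(post.createdAt || "") +
      " AND commenterHex IN (SELECT commenterHex FROM commenters WHERE name = " +
      name + ");",
  ];
}

const example = {
  author: { name: "O'Brien", url: "https://example.test" },
  points: 70,
  createdAt: "2020-01-01T00:00:00",
};
console.log(postToSql(example).join("\n"));
```

The resulting statements could then be joined with newlines and written out with `fs.writeFileSync`.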

Now all the SQL statements you need to run are at /tmp/disqus-comments.sql. If you are like me and are running Commento and its database in a Docker container, you can copy this into the container and run the SQL with the following:

- Find the id of the container running Postgres:

docker ps

- Copy the file from your system into the Docker container:

docker cp /tmp/disqus-comments.sql POSTGRES_CONTAINER_ID:/tmp/disqus-comments.sql

- Get inside the Postgres container:

docker exec -it POSTGRES_CONTAINER_ID bash

- Run the SQL migration script:

psql -U POSTGRES_USERNAME -d commento -f /tmp/disqus-comments.sql

Phew! That should be most of it. At this point you should now see user website links and comment points (score) showing up. But no avatars yet?! What gives?

Remy’s solution of self-hosting the original Disqus avatars is probably a better solution, but you can alternatively do what I did and simply point to the original Disqus image paths. But for that to work you need to make yet another tweak to commento.js! In two places I needed to change this:

| |

To this:

| |

Which is a quick check to use the full URL if that’s what’s in the database (which is what the migrate script above did).
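The idea behind that check can be sketched in isolation. This is a hypothetical helper, not the actual commento.js code: `avatarSrc`, `photo`, and `fallbackUrl` are illustrative names, where `photo` stands for the value stored in the database and `fallbackUrl` for whatever URL the widget would otherwise build.

```javascript
// Hypothetical helper: if the stored photo value is already a full
// http(s) URL, use it directly; otherwise keep the widget's default URL.
function avatarSrc(photo, fallbackUrl) {
  return /^https?:\/\//i.test(photo || "") ? photo : fallbackUrl;
}

console.log(avatarSrc("https://example.test/avatar.png", "/default-avatar"));
console.log(avatarSrc("", "/default-avatar"));
```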

Phew, that was a lot! I left out a lot of steps about setting up a server on Amazon EC2, configuring the mail server, pointing the CNAME of comments.davidbcalhoun.com to the Amazon URL, setting up Docker containers, etc. You can read a bit of that in this article Jared Wolff wrote.

At this point only a few pages on my site, including this one, are using the new self-hosted Commento comments. I want to make sure everything seems ready to go, and I’m hoping to keep the EC2 instance within free limits, so hopefully after this setup it will be a truly free solution!
